Coding, Thinking and Adapting: My Take-Aways from Devoxx Poland 2025

Six years after my first Devoxx Poland visit, I was invited back to present. This time, I had the opportunity to give two talks instead of just one. Apart from speaking, visiting a conference is also a great opportunity to attend other speakers’ sessions. In this blog post, I will share some of the most inspiring talks I attended. Topics include mutation testing, monads, tips for smooth-running applications in production, working effectively with AI, and hexagonal architecture. Continue reading for my recommendations from this year’s edition!

Mutation Testing: Did my test break my code? 🤔

Maha Alsayasneh started her talk with a brief history of how we test software: first manually, then automated, later adding metrics such as code coverage at line and branch level. But even if you manage to reach 100% code coverage, does that mean you tested everything well?

This is where mutation testing comes into play. With mutation testing, a tool injects small faults (mutations) into your code and checks whether any test fails. If a test fails, that is a good sign: the test suite detects the potential bug. If all tests still pass, this might be an indication that the tests could be improved.

In this talk, Maha demonstrated how to integrate this process into a Maven build with PITest.

The code, a trivial Calculator class in Java, made for a great example, as it did not distract the audience’s attention from the main concept of mutation testing.
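To make the idea concrete, here is a minimal sketch in the same spirit. It is not the code from the talk: the `Calculator`, `MutatedCalculator` and `MutationDemo` names are my own, and the mutant is written by hand to mimic the kind of change a tool like PITest might inject (replacing `+` with `-`). Both tests give full line coverage, but only one of them notices the fault.

```java
// Hypothetical example; names are illustrative and not taken from the talk.
class Calculator {
    int add(int a, int b) {
        return a + b;
    }
}

// Hand-written stand-in for a mutant: '+' has been replaced by '-'.
// A real tool would mutate the compiled code instead of subclassing.
class MutatedCalculator extends Calculator {
    @Override
    int add(int a, int b) {
        return a - b;
    }
}

class MutationDemo {
    // Weak test: covers every line of add(), but 2 + 0 equals 2 - 0,
    // so it cannot tell the original and the mutant apart.
    static boolean weakTest(Calculator c) {
        return c.add(2, 0) == 2;
    }

    // Stronger test: 2 + 3 differs from 2 - 3, so the mutant is detected.
    static boolean strongTest(Calculator c) {
        return c.add(2, 3) == 5;
    }

    public static void main(String[] args) {
        Calculator original = new Calculator();
        Calculator mutant = new MutatedCalculator();

        System.out.println("weak test, original:   " + weakTest(original));
        System.out.println("weak test, mutant:     " + weakTest(mutant));
        System.out.println("strong test, original: " + strongTest(original));
        System.out.println("strong test, mutant:   " + strongTest(mutant));
    }
}
```

The weak test passes for both versions, so the mutant goes unnoticed despite 100% coverage. The strong test passes for the original and fails for the mutant, which is exactly the signal a mutation testing tool looks for.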

Do or don’t. There’s no try. Or is there? The enormous power of monads practically explained

Sander Hoogendoorn promised to introduce the power of monads in a practical way. He started with a more philosophical discussion of what “value” software teams deliver. We like to think of code as business value, and encourage our teams to deliver business value by shipping code. But code itself isn’t business value. In fact, code is cost. Less code is easier to maintain, easier to test, easier to understand. As a bonus, the most secure code is code that you didn’t write. So we have plenty of reasons to write simple, concise code!

Back to monads. Apart from the theoretical discussion, Sander compared a monad to a box. The box takes away side effects of your code - such as throwing exceptions, logging stuff, error handling. One can also pass functions to the box. Those functions do something to the thing inside the box. Executing a function inside the box moves the side effects from the place where you wrote the function to the box where you execute it. You can repeat this as often as you want. And then finally, you want to get the thing out of the box - and that’s the one place where you deal with the side effects.
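The box metaphor can be sketched in a few lines of Java. This is a minimal, hypothetical `Try` type of my own, not the implementation Sander showed: `Try.of` puts a computation in the box, `map` and `flatMap` apply functions inside it, and `getOrElse` is the single place where the value comes out and the failure case is handled. For simplicity it only captures `RuntimeException`s.

```java
import java.util.function.Function;
import java.util.function.Supplier;

// Minimal, hypothetical Try "box"; an illustration, not a production library.
abstract class Try<T> {
    // Put a computation in the box; a thrown exception becomes a Failure.
    static <T> Try<T> of(Supplier<T> supplier) {
        try {
            return new Success<>(supplier.get());
        } catch (RuntimeException e) {
            return new Failure<>(e);
        }
    }

    abstract <R> Try<R> map(Function<? super T, ? extends R> f);

    abstract <R> Try<R> flatMap(Function<? super T, Try<R>> f);

    // The one place where the value leaves the box.
    abstract T getOrElse(T fallback);
}

final class Success<T> extends Try<T> {
    private final T value;

    Success(T value) {
        this.value = value;
    }

    @Override
    <R> Try<R> map(Function<? super T, ? extends R> f) {
        // Run f inside the box, so an exception in f is captured as well.
        return Try.<R>of(() -> f.apply(value));
    }

    @Override
    <R> Try<R> flatMap(Function<? super T, Try<R>> f) {
        try {
            return f.apply(value);
        } catch (RuntimeException e) {
            return new Failure<>(e);
        }
    }

    @Override
    T getOrElse(T fallback) {
        return value;
    }
}

final class Failure<T> extends Try<T> {
    private final RuntimeException error;

    Failure(RuntimeException error) {
        this.error = error;
    }

    @Override
    <R> Try<R> map(Function<? super T, ? extends R> f) {
        return new Failure<>(error); // nothing to apply f to; keep the error
    }

    @Override
    <R> Try<R> flatMap(Function<? super T, Try<R>> f) {
        return new Failure<>(error);
    }

    @Override
    T getOrElse(T fallback) {
        return fallback;
    }
}

class TryDemo {
    static int parseAndDouble(String input) {
        return Try.of(() -> Integer.parseInt(input)) // may throw NumberFormatException
                .map(n -> n * 2)                     // runs only on success
                .getOrElse(-1);                      // failure handled in one place
    }

    public static void main(String[] args) {
        System.out.println(parseAndDouble("21"));   // prints 42
        System.out.println(parseAndDouble("oops")); // prints -1
    }
}
```

Note that `parseAndDouble` contains no `try`/`catch` at all: the error handling lives in the box, and the calling code stays a straight chain of steps.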

3 years of Quarkus in production, what have we learned?

Despite the title, most lessons that Jago de Vreede shared in his early Thursday morning talk are generic lessons that apply to every technology stack. His team migrated an application to Quarkus and has been running it in production for roughly three years now. The migration went pretty smoothly, as the existing code base was based on Java EE and MicroProfile. Quarkus implements those standards, so the most important steps were adding Quarkus and removing the WebLogic deployments.

The lessons that Jago presented cover a broad range of topics:

- network security

- version numbering

- logging, monitoring, traceability and observability

- software architecture

- testing

This talk was a great reminder that adopting new technologies should always be grounded in solid software engineering practices. It also was a great demonstration of how many challenges transcend specific frameworks.

It AI-n’t What You Think!

As a small surprise, the whole big room sang a traditional Polish birthday song to Venkat Subramaniam because he was celebrating his birthday. After a few technical challenges in the first minutes, Venkat reflected on the position of artificial intelligence in our profession.

As a metaphor, he recalled how steam engines took decades to evolve, eventually transforming society. We can expect similar transformations because of artificial intelligence (AI). In fact, we might just be at the very beginning of how AI is reshaping our societies.

Taking a step back and keeping ourselves out of the equation might change our perspective on the matter. It also allows us to clearly see the problem at hand and the possible solutions. People who lived before the steam engine didn’t know how their world would change. People who lived after its adoption didn’t know any better than that the steam engine had always been there. The generations that have the hardest time in this model are those in the middle of a change. In the case of AI, that would be our generations.

Alternative roles for AI

So let’s revisit what “AI” can also be, apart from artificial intelligence.

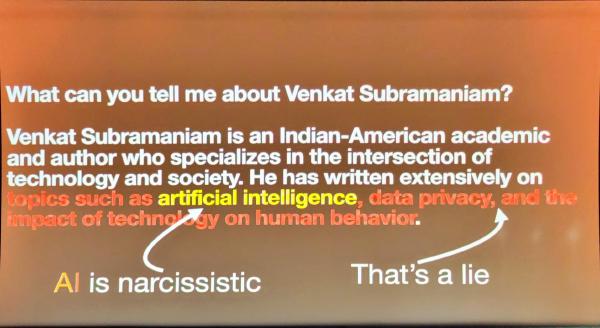

- a source of Alternative Information (with a touch of narcissism), as shown in the picture below.

Things Venkat Subramaniam didn't even know about himself! - an Awesome Investigator. Most AI tools - like most developers - are incredibly good in identifying issues in code that somebody else wrote. So you could feed code generated by AI and ask the same AI to review it instantly. Most models will come with good suggestions to improve the code they generated themselves.

- an Awesome Investigator. Large language models (LLMs) can of course quickly find information from a wide range of sources. They are able to scan through vast amounts of information in a fraction of seconds and come up with relevant results. But if you feed bad information and data into a model, the outputs of the model will not be any better than the inputs. Garbage in, garbage out, as we often say. Of course, there might be a good outcome despite the bad inputs, but out of multi-million possible options, the chance of a model generating correct, concise, quality code is almost zero.

But that brings us to a new problem: how can you tell “good” and “bad” outputs from an LLM apart? As a human, it is already hard to tell “good” and “bad” code apart. The problem here is that LLMs are built using unsupervised training. The principle of “garbage in, garbage out” certainly applies here, resulting in output of often debatable quality.

As is often said, “a fool with a tool is still a fool”, but the current situation makes it even worse: “a fool with a tool is a dangerous fool”. Why? Because the fool feels they achieved something awesome. When you ask an LLM to do something you’re an expert at, you’ll often find the outcomes awful. But when you ask an LLM to do something you’re a novice at, you’ll often be in awe of whatever it presents.

So, what would be the power of LLMs in our work? LLMs could certainly help with ideation and with giving feedback on our own ideas. But we should definitely not expect them to come up with complete solutions. Paraphrasing Aristotle, considering such ideas won’t hurt, as long as we bring our educated minds to the room to assess them.

Taking a step back

What happens if we take a step back and consider the problem without us being a part of it?

The most important part of an application is the business data it processes, followed immediately by the business requirements. If we could get any tool to reliably generate quality application code from data and requirements, if we could truly rely upon such tools, how would that shape our profession? Compilers generate machine code from source code. What if LLMs, in the same way, generated code from data and requirements? Surely, some people concern themselves with the inner workings of a compiler and investigate how it can deliver even better machine code. Likewise, we would still have experts who investigate how LLMs could reliably produce quality code. But the vast majority of the IT industry does not produce machine code, or even care about the quality of the machine code a compiler generates. Maybe, somewhere in the future, we will not be producing much code? We don’t need “faster programmers” per se; we could equally well look for ways to improve agility for the business.

Pulling ourselves as professionals out of the equation, like we just did, allows us to reflect on what the industry needs. Let’s not be blinded by what we know today, otherwise we would fall into the same trap that Henry Ford observed when he noted that people ask for faster horses, not for cars.

The species that best adapt to their environment tend to survive. Venkat’s message was clear: sooner or later, LLMs will transform our profession. It is up to us to approach that change with critical thinking, a willingness to evolve and a clear vision of how LLMs can support our problem-solving skills.

Hexagonal Architecture in Practice, Live Coding That Will Make Your Applications More Sustainable

The final talk I attended was a live-coding session by Julien Topçu. He live-coded an application that adheres to the principles of hexagonal architecture. Before doing so, he briefly discussed the disadvantages of a lasagna architecture and how hexagonal architecture promises to address these. During the coding, he kept on explaining what he did and why he did it that way. An entertaining and educational way to wrap up my Devoxx Poland 2025 experience!
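For readers new to the pattern, here is a minimal sketch of its core idea: the domain defines interfaces (ports) and knows nothing about infrastructure, while adapters on the outside implement them. This is not the application Julien built; the greeting domain and all names (`GreetUser`, `GreetingRepository`, `GreetingService`, `InMemoryGreetingRepository`) are hypothetical and only serve as illustration.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Driving (inbound) port: what the outside world can ask the domain to do.
interface GreetUser {
    String greet(String name, String language);
}

// Driven (outbound) port: what the domain needs from the outside world.
interface GreetingRepository {
    Optional<String> findGreeting(String language);
}

// Domain logic: depends only on the ports, never on a concrete adapter.
final class GreetingService implements GreetUser {
    private final GreetingRepository repository;

    GreetingService(GreetingRepository repository) {
        this.repository = repository;
    }

    @Override
    public String greet(String name, String language) {
        String greeting = repository.findGreeting(language).orElse("Hello");
        return greeting + ", " + name + "!";
    }
}

// Adapter: an in-memory implementation of the driven port. A database or
// HTTP-backed adapter could replace it without touching the domain.
final class InMemoryGreetingRepository implements GreetingRepository {
    private final Map<String, String> greetings = new HashMap<>();

    InMemoryGreetingRepository() {
        greetings.put("fr", "Bonjour");
        greetings.put("nl", "Hallo");
    }

    @Override
    public Optional<String> findGreeting(String language) {
        return Optional.ofNullable(greetings.get(language));
    }
}

class HexagonDemo {
    public static void main(String[] args) {
        // Wiring happens at the edge of the application.
        GreetUser greetUser = new GreetingService(new InMemoryGreetingRepository());

        System.out.println(greetUser.greet("Julien", "fr")); // prints Bonjour, Julien!
        System.out.println(greetUser.greet("Ada", "xx"));    // prints Hello, Ada!
    }
}
```

Because `GreetingService` only sees the `GreetingRepository` interface, it can be tested with a trivial in-memory adapter, and swapping the storage technology later does not require any change to the domain code.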
