Devnexus 2024

This year, I again had the pleasure of joining Devnexus and speaking there. In this blog, I’ll highlight some of the sessions that I attended.

To make navigation a bit easier, here’s an index of the talks that I cover in this blog.

- What makes software architecture so inTRaCtAble?

- Apache Maven 4 is Awesome

- Knowledge Management for the Technically Inclined

What makes software architecture so inTRaCtAble?

As in previous years, the opening keynote was delivered by Neal Ford. He started by discussing the difference between architecture and design. Many consider this a binary question, but he looks at it as a (somewhat messy) spectrum. Any decision about a software solution is located somewhere on that spectrum. On one end are the strategic questions that require high effort to decide upon and deal with significant tradeoffs. On the other end are the tactical questions; they require low effort to decide upon and deal with insignificant tradeoffs.

When dealing with such questions, regardless of their place in the spectrum, you try to weigh the tradeoffs that are in play. This may not always be possible, in which case you may need to dive into various usage scenarios and work them out in the various design options.

Neal and Mark Richards have written several books on software architecture. In one of them, Fundamentals of Software Architecture, they define the laws of software architecture. The first law states that

everything in software architecture is a trade-off.

You may think you found a situation where something isn’t a trade-off, but don’t be fooled: it just means you haven’t identified the trade-off yet.

Often, agile software development is positioned opposite to architecture. Some even say that working in agile situations means you don’t have to do architecture. This is certainly not the case! It only means you want and need fast feedback on your designs and your architecture. So “working agile” in fact means the same principles that apply to creating the software also apply to the architecture itself. As an architect, you want to have early feedback too on your designs, your decisions and your models. The key to all this is iterative design, being able to revisit earlier design decisions and improve upon them.

What is the right granularity of services?

Let’s apply the above to a concrete and often recurring question in software architecture: in a microservices architecture, what is the right granularity of those services?

To answer this question in a particular scenario, Neal presents two types of factors that may influence the answer:

- granularity disintegrators: factors that push toward breaking up one service into multiple smaller services.

- granularity integrators: factors that push toward combining a few services into one larger service.

| Granularity disintegrator | Granularity integrator |
|---|---|
| service functionality | (database) transactions over service boundaries |
| code volatility | data dependencies |
| scalability & throughput requirements | workflow and choreography functionality |
| fault tolerance requirements | |
| access restriction & security requirements | |

Notes on some of those factors:

- Evaluating service functionality, or breaking up by behaviour, requires you to understand your domain really well.

- When evaluating code volatility, or how often the code will likely change in practice, keep in mind that domain code changes more frequently than generic plumbing code. As a result, domain code is less likely to be reusable across services.

- When evaluating data dependencies and workflow and choreography, remember that changes in one microservice may often ripple down to others. This in itself can be a reason for combining them.

Assessing the quality of your architecture

Let’s come back to frequently reviewing the architecture and collecting early feedback on it. As an architect, you need ways to assess the quality of the architecture. A few tools you can consider for this:

- fitness functions: pre-defined measures of how well your architecture addresses a business or technical need or concern.

- monitors: metrics, logs and other operational information on the runtime behaviour of your system.

- tests: not only unit tests; tests can also verify a chain of units, or be used to govern certain architectural principles.

- chaos engineering: deliberately inserting chaos into the runtime behaviour of the system so you can observe how well the architecture deals with that.
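
To make the idea of a fitness function a bit more concrete, here is a minimal sketch in Java. It is my own illustration and not something shown in the keynote: the rule (“the module dependency graph must not contain cycles”) and the module names are assumptions, and the dependency graph is hard-coded rather than derived from a real build.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical fitness function: "the module dependency graph contains no cycles".
// The module names used in main() are made up for illustration.
class AcyclicModulesFitnessFunction {

    // Returns true when no module can reach itself by following its dependencies.
    static boolean isSatisfied(Map<String, List<String>> dependencies) {
        Set<String> finished = new HashSet<>();
        for (String module : dependencies.keySet()) {
            if (hasCycle(module, dependencies, new HashSet<>(), finished)) {
                return false;
            }
        }
        return true;
    }

    // Depth-first search; 'onPath' holds the modules on the path currently being explored.
    private static boolean hasCycle(String module,
                                    Map<String, List<String>> dependencies,
                                    Set<String> onPath,
                                    Set<String> finished) {
        if (onPath.contains(module)) {
            return true;   // we came back to a module we are still exploring
        }
        if (finished.contains(module)) {
            return false;  // already fully explored without finding a cycle
        }
        onPath.add(module);
        for (String dependency : dependencies.getOrDefault(module, List.<String>of())) {
            if (hasCycle(dependency, dependencies, onPath, finished)) {
                return true;
            }
        }
        onPath.remove(module);
        finished.add(module);
        return false;
    }

    public static void main(String[] args) {
        Map<String, List<String>> modules = Map.of(
                "orders", List.of("billing"),
                "billing", List.of("customers"),
                "customers", List.<String>of());
        System.out.println("Architecture is acyclic: " + isSatisfied(modules));
    }
}
```

Running a check like this on every build is what turns it into a fitness function: the architectural rule is measured continuously instead of being reviewed once in a while.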

What happens if there are no best practices?

Personal note: do best practices actually exist? Or should we be a bit more humble and say “proven practice”, adding context to a piece of guidance rather than claiming it will work in every situation?

Consider that most software projects are driven by business needs. Often, the main business driver, especially in agile projects, is time to market. This leads to a set of architectural characteristics or principles. If you find yourself addressing a particular case, you need to identify the characteristics that are in conflict, and you make a weighted trade-off. Ideally, such decisions feed back into the architectural decisions and maybe even into the architectural characteristics.

But, don’t forget about those business drivers and the context! This means that in the end, the design option with the most “pros” may not become the outcome of the trade-off analysis.
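
A small worked example may show why the option with the most “pros” can still lose. The Java sketch below is my own illustration, not something from the talk: the characteristics, the weights and the scores of both design options are all made up.

```java
import java.util.Map;

// Illustrative weighted trade-off analysis; all names and numbers are invented.
class WeightedTradeOff {

    // Sums score * weight over every architectural characteristic that has a weight.
    static int weightedScore(Map<String, Integer> weights, Map<String, Integer> scores) {
        int total = 0;
        for (Map.Entry<String, Integer> weight : weights.entrySet()) {
            total += weight.getValue() * scores.getOrDefault(weight.getKey(), 0);
        }
        return total;
    }

    public static void main(String[] args) {
        // The business driver is time to market, so it gets the highest weight.
        Map<String, Integer> weights = Map.of(
                "timeToMarket", 5, "scalability", 2, "faultTolerance", 1);

        // Scores from 1 (poor) to 5 (excellent) for two hypothetical design options.
        Map<String, Integer> microservices = Map.of(
                "timeToMarket", 1, "scalability", 5, "faultTolerance", 5);
        Map<String, Integer> modularMonolith = Map.of(
                "timeToMarket", 5, "scalability", 2, "faultTolerance", 2);

        System.out.println("microservices:    " + weightedScore(weights, microservices));
        System.out.println("modular monolith: " + weightedScore(weights, modularMonolith));
    }
}
```

With these made-up numbers, the first option scores better on two of the three characteristics, yet ends up with 20 points against 31 for the second option, because the business driver weighs heaviest.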

Apache Maven 4 is Awesome

The second talk I want to mention here was by Chandra Guntur and Rodrigo Graciano about Apache Maven 4. Even though Maven 4 is not yet out, Chandra and Rodrigo shared their insights on Apache Maven 4 and what makes them excited about it. They started out with a basic introduction of Apache Maven and the Project Object Model (a.k.a. POM). They also highlighted where Maven settings live, whether or not you can change them and whether or not you should be using them.

Challenges with Maven 3.x and how Maven 4 addresses them

Next, Chandra and Rodrigo listed a few challenges that Maven 3 users may recognise:

- It’s painful to maintain versions in multi-module projects. To make it a bit easier, you can use the flatten-maven-plugin or the ci-friendly-flatten-maven-plugin, but that doesn’t solve the root cause.

  - Use `${revision}` instead of `${project.version}` to specify the version of the parent project in child modules. No additional plugins are necessary!

- There’s no way to separate build info from consumer info.

  - Nothing to do! Sit back and observe how Maven 4 generates two pom files: one containing the build information, and one stripped to only the information that is relevant for consumers of the project.

- Handling versions of (dependency) sibling modules is a pain.

  - Both the version of a parent module and the versions of sibling dependencies (i.e., dependencies on other modules in the same multi-module project) can be omitted.

- The default versions for official Maven plugins are defined by the version of Maven you use - not by the POM.

  - Maven 4 issues a warning if you do not explicitly set the version of any official Maven plugin.

- Creating a bill of materials (BOM) is not easy.

  - Maven 4 introduces a new packaging type specifically targeted at bills of materials.

To summarise, Apache Maven 4 is awesome - but of course, you already knew that :-).

Knowledge Management for the Technically Inclined

Jacqui Read started the second day with a talk about Knowledge Management. You often hear that knowledge management is vital to success, but what is knowledge management? Many people will think “documentation”, or even “just buy Confluence”, but that is definitely not what knowledge management is about.

So what is knowledge management? Let’s illustrate with a few examples:

- naming a source code repository, as this helps with discoverability.

- integrating applications with one another, as this connects concepts from different domains.

- recording lessons learned, as this captures what did and didn’t work in a particular case.

Knowledge management affects the way we produce software, so it should be ubiquitous:

| | | |
|---|---|---|
| inventories | dashboards | policies & procedures |
| documents | expertise locator | wikis & articles |
| databases | meetings & workshops | forms & templates |

Back in 1994, Tom Davenport came up with the following definition of knowledge management:

Knowledge management is the process of capturing, distributing and effectively using knowledge.

Or, slightly refined by Jacqui herself:

Knowledge management is the process of capturing, publishing and effectively using knowledge.

The harsh reality, however, is that knowledge management is often ignored, forgotten or at best receives a low priority. As a result, there is no capturing, no publishing and no effective usage. This will eventually slow down organisations, allowing their competitors to rise.

Remote first as a driver for Knowledge Management

Since the pandemic, more organisations have started working remote-first. But what does that buzzword mean?

- first and foremost, optimise for remote. This means everybody joins meetings in the same way, either digitally or physically, regardless of whether they are at the office or not.

- value output over time worked, allowing employees to have proper control over their work/life balance.

- a strong emphasis on communication

Why do organisations do this? Because there are some nice benefits attached to this orientation:

- business continuity: it’s easier to run, for example, a 24/7 support line because people can be located all over the world.

- improved productivity: people don’t have to pretend to be working if you truly value their output over time worked.

- better documentation: because a remote-first organisation can only work with asynchronous communication, the quality of documentation will likely improve.

About that last point: all three phases of knowledge management (capture, publish, effectively use) used to be done mostly synchronously. But in a remote-first organisation there is no moment at which everybody is present at the same time, so properly documenting knowledge becomes a requirement.

Tools and Best Practices

When working on Knowledge Management, you most likely need some tools to bring it into practice. For each of them, Jacqui shared a few recommendations to make them the most effective:

- glossaries

  - make them centralised for discoverability.

  - make them federated for maintenance, and resist the urge to appoint a gatekeeper: everybody should be able to maintain them.

  - partition them by domain, as one concept may have different definitions in different domains.

  - add cross-references for simplicity and to prevent duplication.

- products over projects

  - instead of organising knowledge by projects, organise it by products, as this helps discoverability.

  - when multiple projects work on the same product, they can collaborate and share knowledge because it’s centred around that product.

- inventories of knowledge

  - explicit knowledge can easily be written down.

  - implicit knowledge is harder to write down.

  - capture the what, where, who, structure and purpose of the knowledge.

Automating Knowledge Management

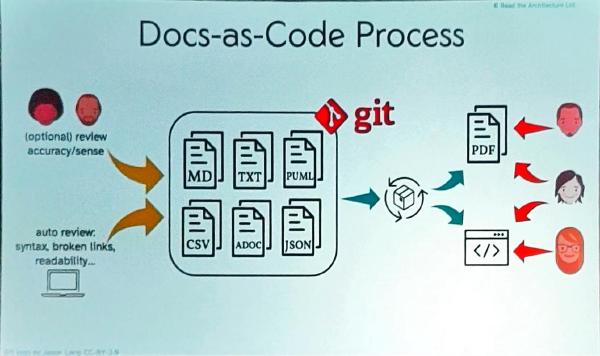

Effective Knowledge Management means you need to automate the repetitive work. A good candidate for this is converting structured, yet heterogeneous information into a more uniform output format. Also, things like syntax, broken links and readability can be checked automatically. Reviewing information for accuracy and correctness, on the other hand, can’t easily be automated.
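
As an illustration of how small such an automated check can be, here is a sketch in Java that looks for broken internal links in a Markdown page. It is my own example rather than something from Jacqui’s talk. The page text and file names are invented, the set of known pages is passed in instead of being read from disk, and external links are simply skipped.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Illustrative check for broken internal links in a Markdown page.
class BrokenLinkCheck {

    // Matches inline Markdown links such as [label](target) and captures the target.
    // Image links like ![alt](image.png) are matched too, which is fine for this check.
    private static final Pattern LINK = Pattern.compile("\\[[^\\]]*\\]\\(([^)\\s]+)\\)");

    // Returns the targets of internal links that are not in 'knownPages'.
    // External links (http/https) are skipped; checking them would need network access.
    static List<String> findBrokenLinks(String markdown, Set<String> knownPages) {
        List<String> broken = new ArrayList<>();
        Matcher matcher = LINK.matcher(markdown);
        while (matcher.find()) {
            String target = matcher.group(1);
            boolean external = target.startsWith("http://") || target.startsWith("https://");
            if (!external && !knownPages.contains(target)) {
                broken.add(target);
            }
        }
        return broken;
    }

    public static void main(String[] args) {
        // Hypothetical page with one typo in a link target.
        String page = "See the [glossary](glossary.md) and the [runbook](runbok.md).";
        Set<String> knownPages = Set.of("glossary.md", "runbook.md");
        System.out.println(findBrokenLinks(page, knownPages));
    }
}
```

A check like this could run whenever documentation changes, leaving reviewers free to focus on whether the content itself is accurate.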

Knowledge Management Maturity

There are various levels of Knowledge Management maturity. In increasing order of maturity:

- chaos → this implies a high cognitive load on both the producing and consuming side of knowledge.

- knowledge management → this level still implies a significant cognitive load, but it gets better already.

- the “hive mind” → on this level, both producing and accessing knowledge only requires a low cognitive load, making it easily available for everyone who needs to access it.

Why is it called the “hive mind”? Because reaching this level requires involving as many minds as possible! This implies highly automated knowledge management, both on a personal level and on a product level. As a result, the organisation builds wisdom. Wisdom builds on knowledge, which builds on information, which eventually builds on data.

Collaborative Knowledge Management approaches

When working collaboratively on Knowledge Management, there are a few approaches you can use. I’m not going to discuss the approaches here, only what effect they may help to achieve.

- big picture event storming helps break down silos and extract implicit knowledge out of people’s heads.

- domain storytelling is also useful for converting implicit knowledge to explicit knowledge.

- bytesize architecture sessions, due to their nature, allow for all voices to be heard, not only the loud ones.

- the 6-page memo ensures everybody is literally on the same page before discussing a topic.

- architecture decision records avoid problems when important decisions are changed later, and help with onboarding and staff turnover. A variation on this, business decision records, can help to record procurement, security, hiring or strategy decisions, and also simplifies future decision-making.

Lessons learned

- Good Knowledge Management is vital for building & understanding any software.

- Collaborate to collect and record knowledge, and ask for a 360-degree review.

- You need to elicit the implicit knowledge because otherwise, you’ll miss it.

- Knowledge Management supports better decision-making; writing documents isn’t a goal on its own. We do it to support better decision-making in the future!

- Engineer knowledge as much as you engineer software, enabling you to later focus on the content.

- Own your knowledge - know what you have and where to find it.

- If you want to own your architecture, you need to own your knowledge first.