Jfokus, Day 2

The second day of Jfokus is just as action-packed as the first one. However, part of that action is me giving two talks, both of them scheduled today, which leaves a little less time for attending other sessions and blogging about them. I did attend some sessions after lunch, which I'll report on below.

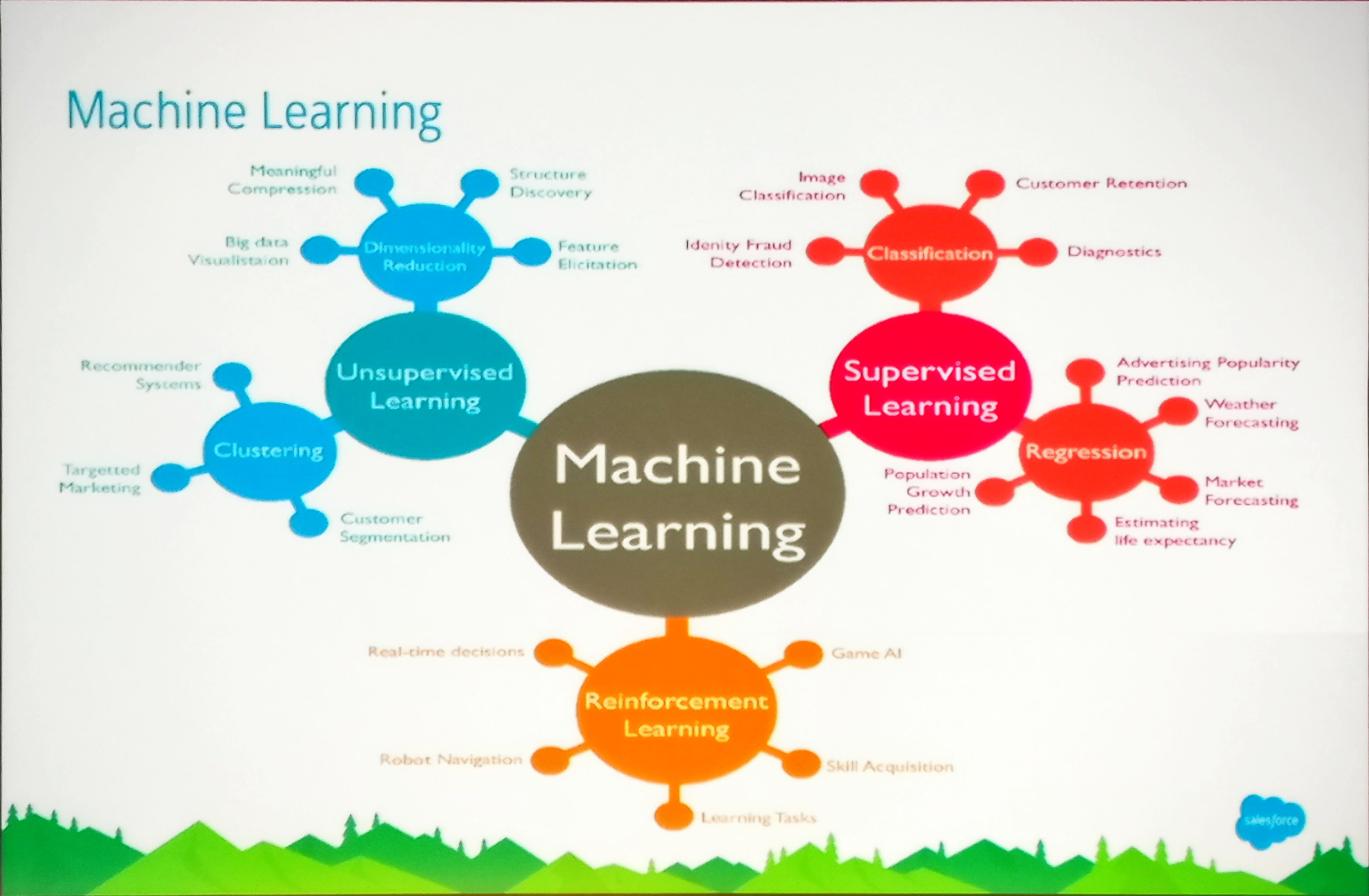

Introduction to Machine Learning

Directly after lunch, James Ward gave an introduction to machine learning. He started with a very recognizable story about how humans (his daughter, in this case) learn new facts about the world around them. Machine learning is in fact no different from human learning: you build a model, try something, see whether and how it changes reality, and update the model.

As an example, let's take product recommendation (or collaborative filtering, in more mathematical jargon). Historically, developers would be tempted to write a lot of code to solve that problem themselves. These days, it's more common to build a model that you can ask questions instead. In this example, PredictionIO (an open source machine learning server) is used, together with Apache Spark and Spark ML. Given a relatively small dataset, you can predict which users will like which products, based on the assumption that users who like the same products have something in common. Sometimes you might even know why users liked a product, for example because of certain traits of the product or of the user. But most of the time you don't, and your dataset is far too large to investigate the exact traits of either the products or the users. In fact, both the product traits and the user traits can be represented as matrices; combining them should give a matrix of recommendations.
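Written out as a formula (the symbols are chosen here for illustration, not taken from the talk): with m users, n products and k latent traits, the user traits form an m × k matrix U and the product traits an n × k matrix P. Their product is the m × n recommendation matrix R, and each predicted entry is the dot product of one user's traits and one product's traits:

$$
R \approx U P^{\top}, \qquad
U \in \mathbb{R}^{m \times k}, \quad
P \in \mathbb{R}^{n \times k}, \qquad
\hat{r}_{ui} = \sum_{f=1}^{k} U_{uf} \, P_{if}
$$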

Alternating Least Squares

In this technique, the model starts out with random values, because you know neither your product traits nor your user traits. These are called latent features: traits of products and users that we know exist, but whose exact meaning we don't know. The algorithm then alternates: it keeps the product traits fixed and solves a least-squares problem for the user traits, then does the reverse, and repeats. This way, the model learns which users like which products, and you can then verify whether that matches the products your actual users liked.
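To make the alternating part concrete, here is a minimal sketch of ALS in plain Scala, without Spark. It is a toy version under stated assumptions: the `AlsSketch` object, its method names and the data are hypothetical; it fits every cell of a small dense 0/1 "likes" matrix (treating unknown cells as 0) instead of the sparse ratings Spark works with; and it adds an L2 penalty `lambda` so every least-squares step is well-posed. The latent features start out random, and each iteration first solves for the user features with the product features fixed, then the other way around.

```scala
import scala.collection.mutable.ArrayBuffer
import scala.util.Random

// Toy alternating least squares (ALS) on a small dense 0/1 "likes" matrix.
object AlsSketch {
  type Matrix = Array[Array[Double]]

  // Hypothetical data: 5 users x 5 products; 1.0 = liked, 0.0 = unknown (treated as 0)
  val likes: Matrix = Array(
    Array(1.0, 1.0, 0.0, 0.0, 0.0),
    Array(1.0, 1.0, 1.0, 0.0, 0.0),
    Array(0.0, 1.0, 1.0, 0.0, 0.0),
    Array(0.0, 0.0, 0.0, 1.0, 1.0),
    Array(0.0, 0.0, 0.0, 1.0, 1.0)
  )

  def dot(a: Array[Double], b: Array[Double]): Double =
    a.indices.map(i => a(i) * b(i)).sum

  // Solves a * x = b (a is k x k) by Gaussian elimination with partial pivoting.
  def solve(a: Matrix, b: Array[Double]): Array[Double] = {
    val n = b.length
    val m = a.map(_.clone)
    val v = b.clone
    for (col <- 0 until n) {
      val pivot = (col until n).maxBy(r => math.abs(m(r)(col)))
      val row = m(col); m(col) = m(pivot); m(pivot) = row
      val tmp = v(col); v(col) = v(pivot); v(pivot) = tmp
      for (r <- col + 1 until n) {
        val factor = m(r)(col) / m(col)(col)
        for (c <- col until n) m(r)(c) -= factor * m(col)(c)
        v(r) -= factor * v(col)
      }
    }
    val x = new Array[Double](n)
    for (r <- n - 1 to 0 by -1) {
      x(r) = (v(r) - (r + 1 until n).map(c => m(r)(c) * x(c)).sum) / m(r)(r)
    }
    x
  }

  // Holding `fixed` constant, each row of the other factor matrix solves the
  // regularized least-squares problem (F^T F + lambda * I) x = F^T r.
  def updateFactors(ratings: Matrix, fixed: Matrix, lambda: Double): Matrix = {
    val k = fixed.head.length
    val gram = Array.tabulate(k, k) { (i, j) =>
      fixed.map(f => f(i) * f(j)).sum + (if (i == j) lambda else 0.0)
    }
    ratings.map { r =>
      val rhs = Array.tabulate(k)(i => r.indices.map(j => fixed(j)(i) * r(j)).sum)
      solve(gram, rhs)
    }
  }

  // Squared reconstruction error plus L2 penalty: the objective ALS minimizes.
  def loss(ratings: Matrix, users: Matrix, products: Matrix, lambda: Double): Double = {
    val err = for {
      u <- ratings.indices
      p <- ratings(u).indices
    } yield math.pow(ratings(u)(p) - dot(users(u), products(p)), 2)
    val penalty = (users ++ products).map(f => dot(f, f)).sum
    err.sum + lambda * penalty
  }

  def train(ratings: Matrix, rank: Int, iterations: Int, lambda: Double,
            seed: Long): (Matrix, Matrix, List[Double]) = {
    val rnd = new Random(seed)
    // Latent features start out random: we don't know what they represent.
    var users: Matrix = Array.fill(ratings.length, rank)(rnd.nextDouble())
    var products: Matrix = Array.fill(ratings.head.length, rank)(rnd.nextDouble())
    val byProduct = ratings.transpose
    val history = ArrayBuffer(loss(ratings, users, products, lambda))
    for (_ <- 1 to iterations) {
      users = updateFactors(ratings, products, lambda)    // products fixed
      products = updateFactors(byProduct, users, lambda)  // users fixed
      history += loss(ratings, users, products, lambda)
    }
    (users, products, history.toList)
  }

  def main(args: Array[String]): Unit = {
    val (users, products, history) =
      train(likes, rank = 2, iterations = 20, lambda = 0.1, seed = 42L)
    println(f"loss: ${history.head}%.3f -> ${history.last}%.3f")
    // predicted scores of all products for user 0
    println(products.map(p => f"${dot(users(0), p)}%.2f").mkString(" "))
  }
}
```

In this toy data, users 0 to 2 and users 3 and 4 form two separate groups, so user 0 should get a higher score for product 2 (liked by the similar user 1) than for products 3 and 4. Because each half-step solves its subproblem exactly, the recorded loss never increases between iterations. Both are relative properties a unit test can check, unlike the exact scores.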

The fun thing is that you don't even know what the exact traits are, for your products or for your users, and you don't need to know, either! Predictions come from combining the user-trait matrix and the product-trait matrix: the score for a given user and product is the dot product of their trait vectors. This matrix factorization yields a matrix of users and products, where each cell indicates how likely it is that a user will like a product. Using Spark ML, it would roughly go like this:

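```scala
import org.apache.spark.SparkContext
import org.apache.spark.mllib.recommendation.{ALS, Rating}

val sc: SparkContext = ...
val favorites: Seq[Favorites] = ...

// every favorite counts as a "like" with rating 1.0
val ratings = sc.parallelize(favorites.map(fav => Rating(fav.user, fav.prop, 1.0)))

// rank is the number of latent features we expect users and products to have
val model = ALS.train(ratings, rank = 5, iterations = 10)

// model.userFeatures and model.productFeatures hold the two trait matrices;
// a predicted score is the dot product of one user row and one product row
val predictionsForUser = model.recommendProducts(userId, 10) // top 10 for one user
```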
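To see what combining the user features and product features (the dot product described above) computes, without a Spark cluster, here is a minimal plain-Scala sketch. The `PredictionSketch` name, the ids and the rank-2 feature vectors are hypothetical, made up for illustration rather than taken from the talk or from Spark: every user is paired with every product, the score is the dot product of their feature vectors, and filtering on one user and sorting gives that user's recommendations.

```scala
// Plain-Scala sketch: turn user and product feature vectors into predictions.
object PredictionSketch {
  // Hypothetical learned features with rank 2 (ids and values are made up).
  val userFeatures: Map[Int, Array[Double]] = Map(
    1 -> Array(0.9, 0.1),
    2 -> Array(0.2, 0.8)
  )
  val productFeatures: Map[Int, Array[Double]] = Map(
    10 -> Array(1.0, 0.0),
    11 -> Array(0.5, 0.5),
    12 -> Array(0.0, 1.0)
  )

  def dot(a: Array[Double], b: Array[Double]): Double =
    a.zip(b).map { case (x, y) => x * y }.sum

  // One score per (user, product) pair: the full users x products matrix.
  val predictions: Seq[(Int, Int, Double)] = for {
    (user, uf) <- userFeatures.toSeq
    (product, pf) <- productFeatures.toSeq
  } yield (user, product, dot(uf, pf))

  // Products for one user, highest predicted score first.
  def predictionsForUser(userId: Int): Seq[(Int, Double)] =
    predictions
      .filter(_._1 == userId)
      .map { case (_, product, score) => (product, score) }
      .sortBy(pair => -pair._2)

  def main(args: Array[String]): Unit =
    println(predictionsForUser(1).map { case (p, s) => f"$p: $s%.2f" }.mkString(", "))
}
```

With these made-up vectors, user 1 leans towards the first trait and so ranks product 10 first, while user 2 leans towards the second trait and ranks product 12 first.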

You could even write a unit test that creates, for example, 5 users and 5 products to train a lightweight model. Of course, you cannot verify the exact score of recommended products. The best you can do is verify that some products are ranked higher or lower than others. You can also verify that your predictions get more accurate as you work with a higher number of latent features. For examples, see jamesward/dreamhouse-sparkml on GitHub.

Spark ML also has a way to visualise the matrices with user traits and product traits. Again, this only works for small datasets; otherwise you'd get far too much data to verify by hand :).

The bigger the model gets, the more attractive it becomes to store and reuse it, so that other applications can easily query it. PredictionIO also has a template gallery you can browse to see which machine learning technique might fit the problem you're trying to solve. It also encapsulates model training and querying by exposing that functionality over REST endpoints, which makes it easier to reuse models across multiple applications.

Graal @ Twitter

Shortly after the coffee break, Chris Thalinger gave a quick intro to Graal and how it is used at Twitter. As Chris explained, Twitter runs much of its code on a custom Java VM based on OpenJDK. Graal is intended as a replacement for C2, the HotSpot just-in-time compiler that was originally designed for long-running, predominantly server-side applications. After discussing a few bugs in Graal he helped squash, he shared some graphs comparing the performance of C2 and Graal.

These graphs showed different performance characteristics, like GC times and user/system CPU-time ratios. Interesting detail: the graphs had no Y-axis, since you could deduce information about the number of tweets from some of them. Since Twitter's systems obviously run 24/7 under relatively high load, it was interesting to see the different compiler implementations and their effect on performance.

Blah blah Microservices blah blah

Since I had to leave halfway through the closing keynote by Jonas Bonér, I'll report on it later, once I've watched the second half on YouTube. The first half was quite interesting and thought-provoking, so stay tuned!
