Jfokus, Day 1

At the moment, I’m in Stockholm, attending and speaking at the Jfokus conference. Last night’s dinner was a great opportunity to get to know a few other speakers. We were even surprised by a performance from the Lemon Squeezy barbershop quartet, which was very beautiful!

But today, the serious stuff started. In the following sections, I’ve written down my notes and observations from each session I attended.

Java 9: Make Way for Modules!

After a fascinating triple-drum-kit opening, Mark Reinhold from Oracle took the stage. In about an hour, he discussed how the Java Platform has evolved over its recent releases. For each of the last major releases, he explained which major pain point (mostly from the developer’s perspective) it addressed. But the main course was by far the most interesting: how JDK 9 addresses two such pain points, the classpath and the monolithic runtime. Very interesting material, with the occasional joke in between.

Become a ninja with Angular 2

This session started off with a short introduction to the French language, which was very helpful since Agnes Crepet and Cédric Exbrayat did indeed have quite a French accent :). It also included a few less polite (but even more useful) phrases.

Next up was a short introduction to the differences between Angular 1 and 2. It was interesting to see that components in Angular 2 are not supposed to communicate with each other through a shared scope. That gave me some headaches back when I used AngularJS, so it’s nice to see it gone.

It was also great to see that the digest cycle has disappeared. Of course, there was the dreaded “Maximum iteration limit exceeded” message. Although less obvious, the digest cycle could also cause performance issues: AngularJS 1.x re-digests up to ten times, so an application with a lot of watches could easily perform a lot of unnecessary computations. This especially affected larger applications! Angular 2.x introduces zone.js, which makes re-digesting no longer necessary.
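To make the cost of re-digesting concrete, here is a toy model of AngularJS 1.x-style dirty checking, written in Java. It is a simplified sketch, not AngularJS’s implementation: the class, its method names and the `evaluations` counter are hypothetical, and the ten-pass limit mirrors the behavior described above. Each pass re-evaluates every watcher and repeats while any listener changed something, so the work grows with the number of watchers times the number of passes.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Objects;
import java.util.function.Consumer;
import java.util.function.Supplier;

/** Toy model of AngularJS 1.x-style dirty checking (hypothetical, not AngularJS code). */
class DigestLoop {
    /** A watcher remembers the last value it saw and calls its listener when the value changes. */
    static final class Watcher {
        final Supplier<Object> getter;
        final Consumer<Object> listener;
        Object last = new Object(); // sentinel, so the first check always counts as a change

        Watcher(Supplier<Object> getter, Consumer<Object> listener) {
            this.getter = getter;
            this.listener = listener;
        }
    }

    static final int MAX_ITERATIONS = 10; // give up after ten passes, like AngularJS 1.x

    private final List<Watcher> watchers = new ArrayList<>();
    int evaluations = 0; // total number of getter calls across all passes

    void watch(Supplier<Object> getter, Consumer<Object> listener) {
        watchers.add(new Watcher(getter, listener));
    }

    /** Re-checks every watcher until a full pass finds no changes. */
    void digest() {
        int passes = 0;
        boolean dirty;
        do {
            if (passes++ == MAX_ITERATIONS) {
                throw new IllegalStateException("Maximum iteration limit exceeded");
            }
            dirty = false;
            for (Watcher w : watchers) {
                Object value = w.getter.get();
                evaluations++;
                if (!Objects.equals(value, w.last)) {
                    w.last = value;
                    w.listener.accept(value); // may change state that other watchers read
                    dirty = true;
                }
            }
        } while (dirty);
    }
}
```

With two watchers where the first listener updates the value the second one reads, `digest()` needs two passes (four evaluations); a listener that changes its own watched value on every call never stabilizes and hits the limit after ten passes.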

It was also nice to see that not only the TypeScript sources but also the HTML templates are type-checked during the build and then translated into plain JavaScript (ahead-of-time compilation). This not only prevents type errors (especially in your templates) but also enables faster application startup. It also removes the need to ship the template compiler to your end users (as was the case in Angular 1.x), which reduces the size of your application.

Value Types – The Next Big Thing for Java

Although Java 9 hasn’t even been officially released yet, Henning Schwentner gave a nice after-lunch introduction to value types. The blue faces and other strange colors in his presentation didn’t really hurt the message about what might be introduced in Java after version 9. Java, as we currently know it, is an object-oriented language, meaning it’s modelled around objects: representations of things that are created, change over time and are finally destroyed and cleaned up. Classes describe what these objects look like: what their properties are and what methods they have. Objects are identified by their memory address.

Values live on the stack. As a consequence, you can calculate how much memory you need when compiling the source code, which makes memory management relatively easy. The heap, where objects live, is different: you can’t tell in advance how much memory will be needed there. That’s what memory management is for, and that’s why we need a garbage collector. The only kind of values Java currently knows are primitives. If you need a value with more semantics (e.g. an IBAN, an IATA code, a GPS coordinate), you need to write a class that encapsulates the actual value. As a consequence, memory usage increases, since you need to store a reference to the object as well as the value itself. Arrays have a similar overhead: an array of objects stores references, and each element lives in its own object elsewhere on the heap.

Value Types in Java

The answer to at least some of these issues is a value type: it “works like an int, codes like a class”. Coding such classes has a few restrictions, though:

- you must override `equals()`, otherwise you would get reference equality;
- all instance variables must be `final`.

Since they work like values, you can’t instantiate them using `new`, because that keyword is traditionally reserved for creating objects on the heap.
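In today’s Java, the closest approximation of these restrictions is a final class with final fields that overrides `equals()` (and `hashCode()`, to honor the contract between the two). The sketch below uses a hypothetical `Point`; unlike a future value type, it is still created with `new` and lives on the heap as a separate object.

```java
import java.util.Objects;

/** Hypothetical example: today's approximation of a value type, not the proposed syntax. */
final class Point {
    private final int x; // all state is final, so a Point never changes after construction
    private final int y;

    Point(int x, int y) {
        this.x = x;
        this.y = y;
    }

    int x() { return x; }
    int y() { return y; }

    /** Without this override, equals() would fall back to reference equality (==). */
    @Override
    public boolean equals(Object o) {
        if (this == o) return true;
        if (!(o instanceof Point)) return false;
        Point other = (Point) o;
        return x == other.x && y == other.y;
    }

    @Override
    public int hashCode() {
        return Objects.hash(x, y); // equal points must produce equal hash codes
    }
}
```

`new Point(4, 7).equals(new Point(4, 7))` is true, while `new Point(4, 7) == new Point(4, 7)` is false, because `==` still compares references.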

The final syntax isn’t specified yet. One of the proposals is to instantiate them using a kind of tuple syntax (familiar if you know Scala), which would look like `final Point point = (4, 7)`. That looks pretty elegant to me, and certainly better than another proposal, which looked like `final Point point = __makeValue(4, 7)`.

Since value types aren’t reference types, they cannot be `null`. At the end of the day, a value type is constructed from primitives, so the proposal is to define its default value as the (recursive) default value of all the underlying primitives. Again, that seems pretty neat to me.
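The “recursive default” rule can be illustrated with plain reflection in today’s Java. This is only an illustration under stated assumptions: `Point` and `Line` are hypothetical stand-ins for future value types, every field is assumed to be either a primitive or another such class, and instead of creating instances the sketch describes the default as a nested map from field names to primitive defaults.

```java
import java.lang.reflect.Array;
import java.lang.reflect.Field;
import java.lang.reflect.Modifier;
import java.util.LinkedHashMap;
import java.util.Map;

/** Illustrates "default = recursive default of the underlying primitives" (hypothetical types). */
class DefaultValues {
    // Hypothetical stand-ins for value types, written as ordinary classes today.
    static final class Point {
        final int x, y;
        Point(int x, int y) { this.x = x; this.y = y; }
    }

    static final class Line {
        final Point from, to;
        final boolean dashed;
        Line(Point from, Point to, boolean dashed) { this.from = from; this.to = to; this.dashed = dashed; }
    }

    /**
     * For a primitive type, returns its JVM default (boxed). For a class, returns a map
     * from each instance field name to that field's recursive default.
     */
    static Object defaultOf(Class<?> type) {
        if (type.isPrimitive()) {
            // A fresh one-element primitive array holds exactly the default (0, false, '\0', ...).
            return Array.get(Array.newInstance(type, 1), 0);
        }
        Map<String, Object> fields = new LinkedHashMap<>();
        for (Field field : type.getDeclaredFields()) {
            if (Modifier.isStatic(field.getModifiers()) || field.isSynthetic()) {
                continue; // only instance state contributes to a value's default
            }
            fields.put(field.getName(), defaultOf(field.getType()));
        }
        return fields;
    }
}
```

For `Line`, every `int` ends up as 0 and the `boolean` as false, so both endpoints default to the point (0, 0) rather than to `null`.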

Comparing with other languages

In C++, you can choose for every variable whether it should live on the stack or on the heap: if you allocate it using the `new` keyword, it lives on the heap; otherwise it lives on the stack.

In C#, there’s also a `struct` concept. Structs aren’t necessarily immutable, since you can change the state of a struct after it was created. Also, since they can’t be null, the concept of `Nullable<T>` was introduced, which feels a bit unfamiliar.

Relation to Domain Driven Design

Value types could be a nice complement to techniques like Domain Driven Design. The combination would be like having your cake and eating it too:

- it would let you express concepts from the business domain (for example, `Amount` and `Account`) with more semantics than plain primitive values;
- on the other hand, it wouldn’t automatically incur the overhead of memory management and the like that currently comes with using classes and objects.
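To illustrate the DDD angle, here is a hypothetical `Amount` value object written as an ordinary class in today’s Java. It shows the kind of semantics a value type could carry: it pairs a `BigDecimal` with a `java.util.Currency`, refuses to add amounts in different currencies, and compares by value. The class name and its rules are assumptions for illustration, and it assumes an ISO currency with a regular number of decimals (e.g. EUR or USD).

```java
import java.math.BigDecimal;
import java.math.RoundingMode;
import java.util.Currency;
import java.util.Objects;

/** Hypothetical DDD value object: immutable, compared by value, richer than a bare BigDecimal. */
final class Amount {
    private final BigDecimal value;
    private final Currency currency;

    Amount(BigDecimal value, Currency currency) {
        this.currency = Objects.requireNonNull(currency);
        // Normalize to the currency's usual decimals; throws ArithmeticException instead of rounding.
        this.value = value.setScale(currency.getDefaultFractionDigits(), RoundingMode.UNNECESSARY);
    }

    static Amount of(String value, String currencyCode) {
        return new Amount(new BigDecimal(value), Currency.getInstance(currencyCode));
    }

    /** Domain rule: amounts in different currencies cannot simply be added. */
    Amount plus(Amount other) {
        if (!currency.equals(other.currency)) {
            throw new IllegalArgumentException("Cannot add " + other.currency + " to " + currency);
        }
        return new Amount(value.add(other.value), currency);
    }

    @Override
    public boolean equals(Object o) {
        if (!(o instanceof Amount)) return false;
        Amount other = (Amount) o;
        return value.equals(other.value) && currency.equals(other.currency);
    }

    @Override
    public int hashCode() {
        return Objects.hash(value, currency);
    }

    @Override
    public String toString() {
        return value.toPlainString() + " " + currency.getCurrencyCode();
    }
}
```

`Amount.of("10.5", "EUR").plus(Amount.of("2.25", "EUR"))` gives `12.75 EUR`, whereas adding an amount in USD throws an `IllegalArgumentException`.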

The DevOps disaster: 15 ways to fail at DevOps

Next up was a talk by my ex-colleague Bert Jan Schrijver on disasters in “doing” DevOps. Since he didn’t have a recipe for success, he decided to share 15 ways not to do it. We know we should start with why, so why “do DevOps” in the first place? It helps you move faster and focus on value instead of problems. Historically, there has been friction between change and stability, because server hardware was expensive and everything was done to keep it running smoothly. Change was the threatening factor in that era. Those days are long gone: if you have a credit card, you can get as much hardware at your fingertips as you could possibly wish for. But you still need to handle it responsibly, and you still need the right skills to do that.

Some of the disasters are actually a bit funny, such as the story about his two kids performing “monkey testing” on furniture, or the BA-style quote about tooling. But apart from that, the talk offered quite a bit of food for thought. How do we look at DevOps? How do we make sure everyone in the team feels the same responsibility when sh*t hits the fan? How do we make it possible for everyone in the team to take that responsibility?

Apart from that, Bert Jan also shared some thoughts on how to move towards DevOps. Do you have management support? Are all people and all departments involved? How do you react when failure happens? Do you blame people, or reserve a good parking spot for them? In a real DevOps culture, there’s never just one person to blame, since responsibility is shared. Code has been written, reviewed, tested and deployed: that makes at least four people responsible.

At the end of the day, DevOps is about empathy and culture way more than it is about tooling and processes.

Expect the Unexpected: How to Deal with Errors in Large Web Applications

During the afternoon coffee break, Mats Bryntse gave a quickie on error handling. Whether errors occur in your own code or in the frameworks and libraries you use, they result in a bad user experience either way.

The window object receives an error event when something bad happens, so an easy approach is to listen for those events:

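```javascript
// Option 1: a global handler that receives the error details as separate arguments
window.onerror = function (msg, url, line, col, error) {
    // msg: message, url: script URL, line/col: position, error: the Error object (if available)
};

// Option 2: a listener that receives an event object instead
window.addEventListener('error', function (event) {
    // for script errors, event is an ErrorEvent (event.message, event.filename, event.lineno, event.error)
}, true); // capturing (true) also reports load errors of resources such as <img> and <script> elements
```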

But knowing that an error occurred is one thing; fixing it is another. As developers, we have quite a wishlist when it comes to reproducing problems. To put it differently: “a live breakpoint is worth a thousand call stacks.”

A simple approach is to send a request to the server with the call stack as a request parameter. At least you’ll be notified when something goes wrong, and you’ll have some data. But interpreting that data is rather hard, and you don’t know what happened before the error occurred. In its simplest form, `new Image().src = '/log.php?data=...'` will probably do.

But at the end of the day, whether you roll your own or use an off-the-shelf solution, it’s more about awareness than about actually solving problems.

Commercial tools are available that also record the context and everything that happens in the user’s browser. It might seem like an interesting development, but it raises a privacy concern for me. If a developer can replay every action and see every part of the screen the user saw, that’s a nice world for the developer. But when working on, for example, an internet banking system or a hospital website, developers shouldn’t see everything the user sees; in fact, they aren’t even allowed to. How would such tools deal with that? Or would it mean you need to roll your own solution, again?

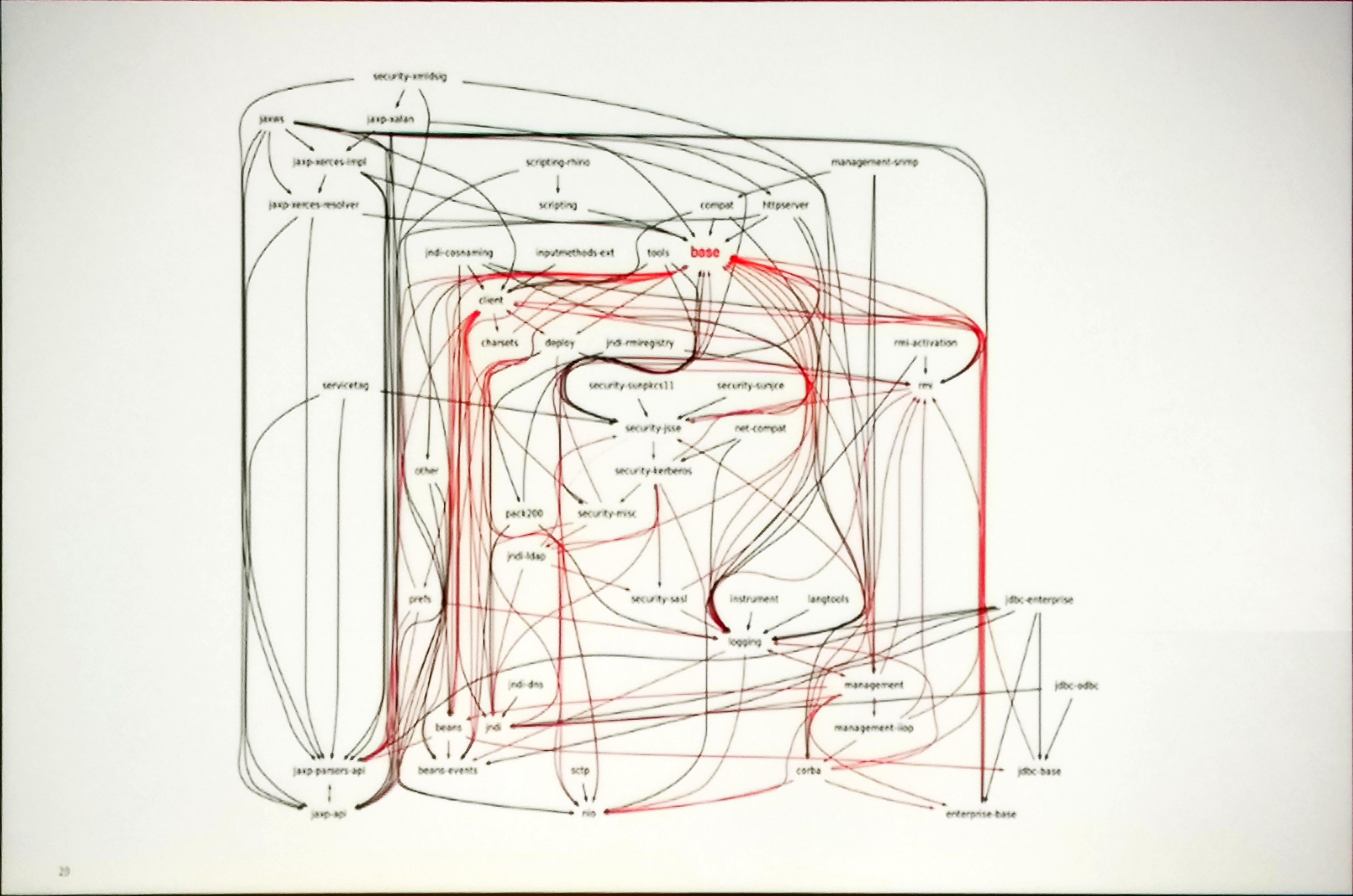

Visualizing Java code bases

Next up was Andrey Adamovich talking about visualizing code bases. Getting to know a code base is pretty hard, and the challenge is even bigger for code bases with a long history. In fact, the current state of a code base is a combination of its historical state and the changes applied to it. Analyzing it with tools like SonarQube doesn’t always make things better: if you didn’t think upfront about which rules you want to apply, you’ll probably be faced with multiple years of technical debt the first time you run it.

So what are your options?

- Size does matter: count the lines of code and calculate the ratio between comments and code. A higher ratio is not necessarily better: a public API obviously needs a lot of comments, but elsewhere, lots of comments might hint that people didn’t take the time to (re)structure their code properly!

- Visualize, but don’t use Excel for that :). d3.js is one tool that can help with this, but there are others, too. For example, a Hierarchical Edge Bundling chart can show which files are often changed together (see below as well).

- Temporal analysis is pretty well known from GitHub and the like: it visualizes events in a code base over time. Gource is an open-source tool that creates a video of how your source set(s) change over time. Just like movies, you might have to watch them a few times before you grasp everything that happens in the generated videos :).

- Code Maat is another tool that analyses VCS logs. It requires a very specific input format (which is well documented on its site) and outputs a CSV file. For example, you can use it, again, to find files that often or always change together. That might point to (too) strong coupling.

- Another interesting way of visualising data is using Elasticsearch and Kibana. For example, you can use them to see whether a file is changed by a lot of people or by just a few. A file changed by many people could be a sign that it’s coupled to a lot of other things, but it may just as well show that people collaborate, which is obviously not a bad thing.

- jQAssistant is quite a different tool: it treats your code base as a graph. You can then run graph queries on your code base, e.g. a simple one to find the number of methods per class. Thanks to the Neo4j web interface, you get the visualisation (almost) for free.
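The “files that change together” idea behind the edge bundling chart and Code Maat can be sketched in a few lines. This is a simplified illustration, not Code Maat’s actual algorithm or input format: it assumes the VCS log has already been parsed into one set of file paths per commit, and it simply counts shared commits per pair of files.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

/** Simplified change-coupling count over a pre-parsed commit log (hypothetical format). */
class ChangeCoupling {
    /** For every pair of files, counts the number of commits that touched both. */
    static Map<String, Integer> coChanges(List<Set<String>> commits) {
        Map<String, Integer> pairs = new TreeMap<>();
        for (Set<String> changedFiles : commits) {
            // Sort the files so a pair is always keyed as "a <-> b", never "b <-> a".
            List<String> files = new ArrayList<>(new TreeSet<>(changedFiles));
            for (int i = 0; i < files.size(); i++) {
                for (int j = i + 1; j < files.size(); j++) {
                    pairs.merge(files.get(i) + " <-> " + files.get(j), 1, Integer::sum);
                }
            }
        }
        return pairs;
    }
}
```

Pairs with a high count relative to how often each file changes on its own are the candidates for (too) strong coupling.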

Using these techniques, you can find so-called hot spots in your code base. You can use this list as input for

- finding maintenance problems

- risk management

- code review candidates

- determining targets for exploratory tests
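One common heuristic for combining size and history into such a list, not necessarily the one shown in the talk, is to rank files by how often they changed multiplied by their number of lines. In the sketch below, the input maps (revisions per file and lines of code per file) are hypothetical; in practice they would come from a VCS log and a line counter.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

/** Hypothetical hot-spot ranking: files that are both large and frequently changed come first. */
class HotSpots {
    static List<String> rank(Map<String, Integer> revisions, Map<String, Integer> linesOfCode, int top) {
        return revisions.keySet().stream()
                .filter(linesOfCode::containsKey) // skip files that no longer exist
                .sorted(Comparator.comparingLong(
                        (String file) -> (long) revisions.get(file) * linesOfCode.get(file)).reversed())
                .limit(top)
                .collect(Collectors.toList());
    }
}
```

A file changed 2 times with 1000 lines (score 2000) then outranks one changed 10 times with 100 lines (score 1000); which weighting works best is a judgment call for your own code base.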

Scaling the Data Infrastructure @ Spotify

As a nice end to this first day, Matti Pehrs talked about the data infrastructure at Spotify. After an anecdote about curtains that resolve data problems, he explained how Spotify came to the point where they were running out of physical space. That forced them to choose between building a bigger data center and moving to the cloud. Since Spotify wanted to focus on music streaming, not on operating data centers, they chose the latter.

Data at Spotify scale is quite impressive. With 100 million monthly active users, 30 million songs, 2 billion playlists and 60 countries, you’re not even there yet, because the amount of data keeps increasing. Users generate around 50 TB per day; developers and analysts produce even more, around 60 TB per day. Data is also at the heart of operations: features are A/B-tested before being rolled out completely, and that kind of testing is data-intensive too. The relations between data, processing jobs and the teams’ actual focus areas are almost impossible to visualize, let alone understand.

One of the causes of the summer 2015 incidents was that the Hadoop cluster had a lot of edge nodes scheduling reporting jobs using cron. More often than not, those jobs were scheduled on the hour or on the half hour. Especially when the cluster was restarted for whatever reason, these edge nodes would effectively DDoS the whole cluster. The solution was as simple as it was crude: create firewall rules that temporarily blocked certain network segments.
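A common way to avoid this kind of thundering herd (not the fix described in the talk) is to give each job a stable, pseudo-random offset instead of starting everything at :00 or :30; Jenkins’ cron syntax offers this through its `H` symbol. The sketch below is a hypothetical helper that derives a start minute within each half hour from a hash of the job name.

```java
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical helper: spread half-hourly jobs by hashing their names. */
class CronJitter {
    /** A stable minute in [0, 30): the same job always gets the same offset. */
    static int startMinute(String jobName) {
        return Math.floorMod(jobName.hashCode(), 30); // floorMod keeps negative hashes in range
    }

    /** Counts how many jobs would start in each minute of the half hour. */
    static Map<Integer, Integer> histogram(Iterable<String> jobNames) {
        Map<Integer, Integer> counts = new TreeMap<>();
        for (String job : jobNames) {
            counts.merge(startMinute(job), 1, Integer::sum);
        }
        return counts;
    }
}
```

Each job keeps a predictable schedule, but the jobs as a whole are spread across the 30 minutes instead of all firing at once.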

In all honesty, the pace of the talk was a bit too fast for me at that time of day. I did, however, take away the two lessons presented at the end:

- Make sure you have the right tools to deal with data-related incidents.

- Remember that your capacity model can fail if you’re using it at a bigger scale than expected: automate all the things :).